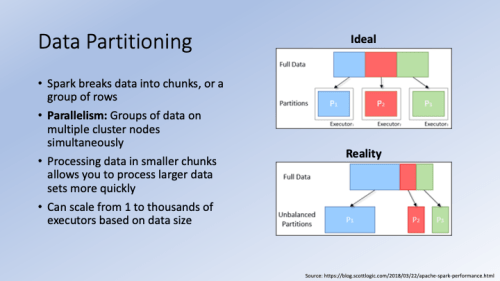

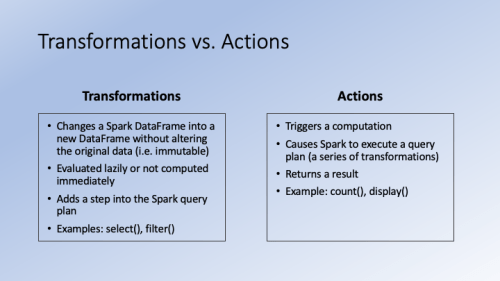

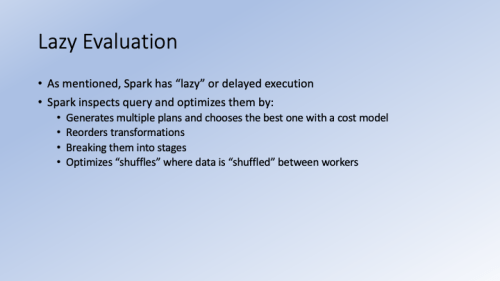

In this session we cover ways to optimize PySpark code. We start with situations where slowness commonly occurs, such as uneven partitions and skewed joins. To address these issues, I explain repartitioning, coalescing, and broadcast joins. I also explain how to cache commonly used datasets in memory or on disk. Finally, I show the interface where you can monitor memory and CPU usage to check that your cluster is sized appropriately.
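As a rough illustration of how these pieces fit together, here is a minimal sketch, not the code from the session. The names `skew_ratio`, `balance_and_join`, `facts`, `dims`, and the `max_skew` threshold of 2.0 are all hypothetical choices made for this example. It assumes `facts` is a large DataFrame, `dims` is a lookup table small enough to fit in each executor's memory, and that the cluster allows RDD operations.

```python
from typing import Sequence


def skew_ratio(partition_sizes: Sequence[int]) -> float:
    """Largest partition size divided by the mean size (1.0 means perfectly even)."""
    if not partition_sizes or sum(partition_sizes) == 0:
        return 1.0
    mean = sum(partition_sizes) / len(partition_sizes)
    return max(partition_sizes) / mean


def balance_and_join(facts, dims, key, num_partitions=8, max_skew=2.0):
    """Even out `facts` if needed, broadcast-join it to `dims`, and persist the result.

    Hypothetical helper: the threshold and partition count are illustrative only.
    """
    # Imported here so skew_ratio stays usable without Spark installed.
    from pyspark import StorageLevel
    from pyspark.sql.functions import broadcast

    # Count rows in every partition, including empty ones.
    sizes = facts.rdd.mapPartitions(lambda rows: [sum(1 for _ in rows)]).collect()

    if skew_ratio(sizes) > max_skew:
        # repartition(n) triggers a full shuffle and spreads rows evenly.
        # coalesce(n) would only merge existing partitions without a full shuffle,
        # so it suits reducing the partition count rather than fixing skew.
        facts = facts.repartition(num_partitions)

    # The broadcast hint asks Spark to ship the small table to every executor,
    # which avoids shuffling the large table for the join.
    joined = facts.join(broadcast(dims), on=key, how="left")

    # Keep the result in memory and spill to disk when it does not fit.
    # Persisting is lazy: nothing is stored until an action runs.
    return joined.persist(StorageLevel.MEMORY_AND_DISK)
```

In a notebook you might call `balance_and_join(sales_df, stores_df, key="store_id")` (both DataFrame names are placeholders) and then run an action such as `.count()` to materialize the persisted result. Note that repartitioning evens out row counts per partition; a join that is skewed because one key value dominates may need other techniques.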

Lastly, I show how to use multiple languages, including SQL and R, in a single Databricks notebook.

Data Available Here and Code Available Here

Suggested Reading:

- Spark: The Definitive Guide, Chapter 8 (p. 139-149) and Chapter 19 (p. 315-329)

- Learning Spark, 2nd Edition, Chapter 7 (p. 173-205)